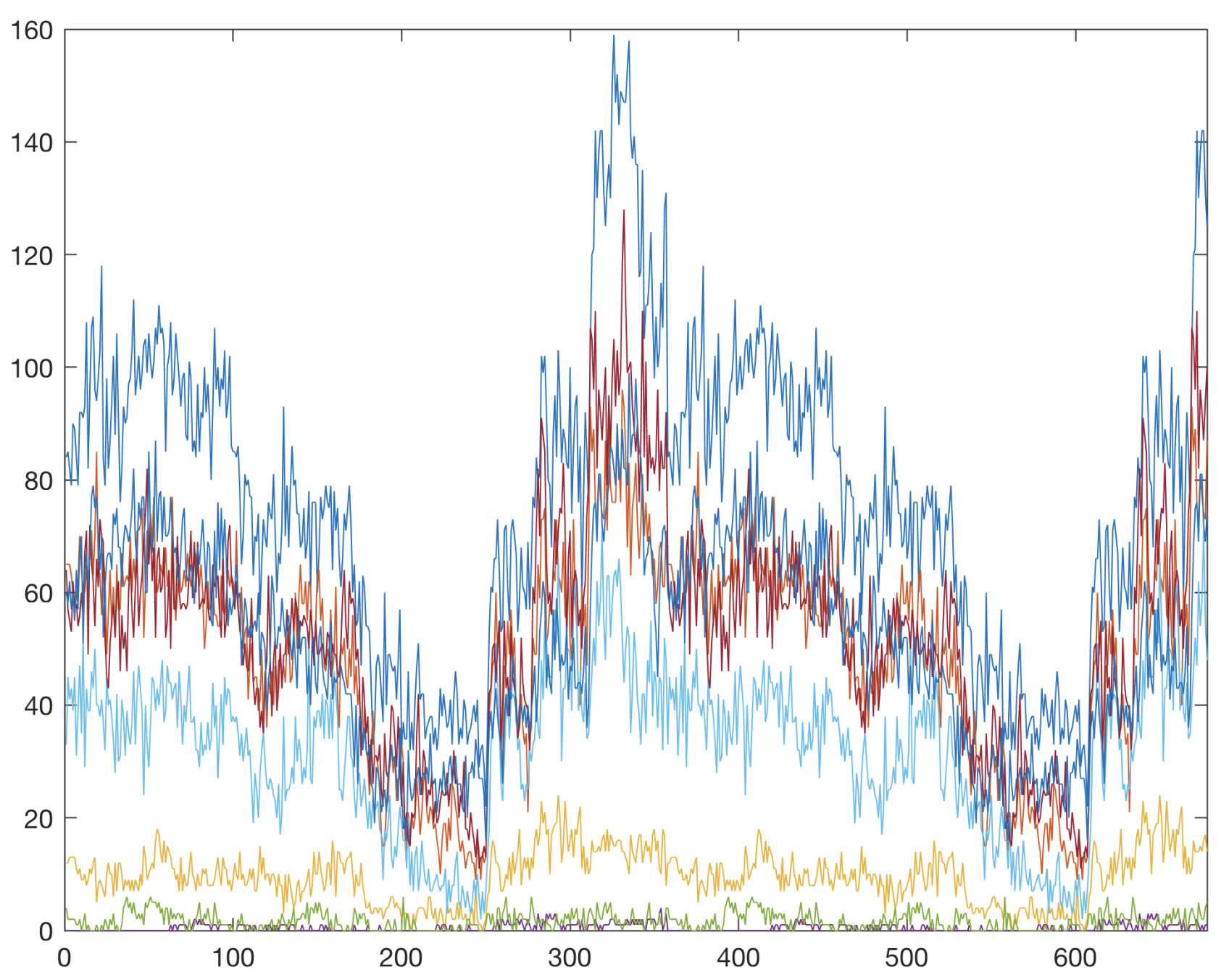

Computational Neurobiology Lab

The Salk Institute for Biological Studies

Programmer Analyst Intern

First author on the project's paper, published in the Journal of Speech, Language, and Hearing Research.

Software Engineer Intern

Enhanced a service status website to enable customers to check for real-time internet maintenance and outage issues.

Created a consolidated website for two teams to compare business location services, internet plans, and user products.

Used JavaScript, TypeScript, and React.